What is Deep Learning?

Note: Segmentation using deep learning requires the Deep Learning extension to the 2D Automated Analysis module, and the Image-Pro Neural Engine must be installed. For instructions, see Installing the Image-Pro Neural Engine.

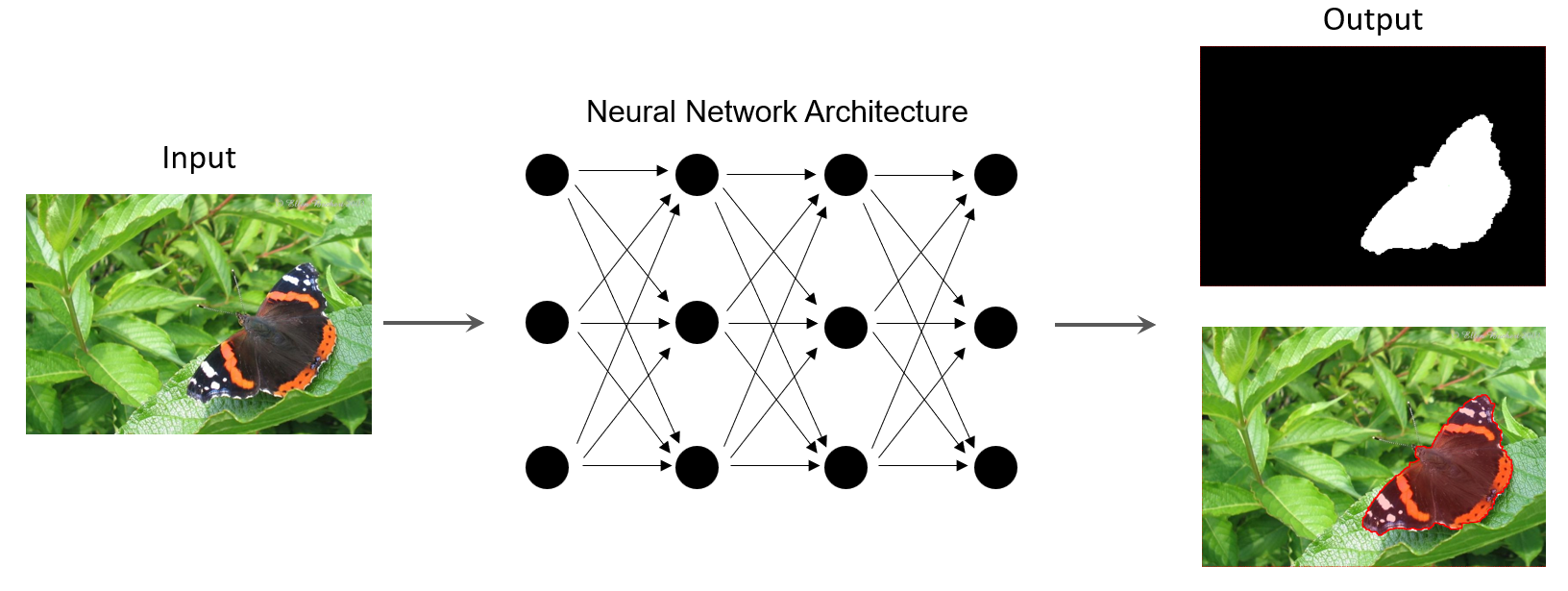

Deep learning is a form of artificial intelligence (AI) that learns from large amounts of data using multi-layered artificial neural networks, which are loosely modeled on the connections between neurons in the brain. The 'deep' in the name refers to the multiple layers of these networks. A multi-layered neural network has an input layer (in Image-Pro, an image), a series of hidden layers that analyze the image, and an output layer (in Image-Pro, a segmented image).
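
To make the input, hidden and output layers concrete, here is a minimal pure-Python sketch of a toy network that turns a tiny grayscale image into a segmentation mask. It is an illustration only, assuming a made-up 5 x 5 image and hand-picked weights; it is not how Image-Pro or any of its models is implemented, and a real network has many more layers and learns its weights from training data.

```python
import math

# Input layer: a tiny 5 x 5 grayscale "image" with a bright 3 x 3 object on a dark background.
IMAGE = [
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.9, 0.8, 0.9, 0.0],
    [0.0, 0.8, 1.0, 0.8, 0.0],
    [0.0, 0.9, 0.8, 0.9, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]


def conv3x3(image, kernel, bias):
    """One hidden layer: a 3 x 3 convolution (zero padding at the borders) followed by ReLU."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = bias
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += kernel[dy + 1][dx + 1] * image[yy][xx]
            out[y][x] = max(0.0, total)  # ReLU activation: negative responses become 0
    return out


def output_layer(feature_map, weight, bias, threshold=0.5):
    """Output layer: per-pixel sigmoid probability, thresholded into a binary mask."""
    return [
        [1 if 1.0 / (1.0 + math.exp(-(weight * v + bias))) > threshold else 0 for v in row]
        for row in feature_map
    ]


# Hypothetical, hand-picked (untrained) weights.
SMOOTH = [[1 / 9] * 3 for _ in range(3)]       # hidden layer 1: local averaging
IDENTITY = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]   # hidden layer 2: pass-through; its negative bias suppresses weak responses

hidden1 = conv3x3(IMAGE, SMOOTH, bias=0.0)
hidden2 = conv3x3(hidden1, IDENTITY, bias=-0.2)
mask = output_layer(hidden2, weight=20.0, bias=-2.0)
for row in mask:
    print(row)
```

Running it prints a mask with 1s on the bright 3 x 3 object and 0s on the background. In a trained model, the kernels and biases that are set by hand here would be learned from labeled examples.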

Deep learning neural networks excel at tasks that appear visually simple to us (with the benefit of a visual cortex) but that were previously extremely complicated or impossible to compute, such as finding each instance of an object of interest against a complex background. Finding the butterfly in the image above is a good example of this kind of task.

Deep learning requires a large amount of parallel computing power. Graphics Processing Units (GPUs), originally designed to render three-dimensional graphics, have proved particularly well suited to this task. A supported GPU should be considered an essential component for using deep learning in Image-Pro.

Image-Pro provides access to several neural network architectures; each instance of an architecture is known as a model. Both pre-trained models, which are ready to use, and untrained models, which must be trained before they are useful, are available.

Segmentation

To perform segmentation using deep learning, select the Count/Size ribbon and click the AI button in the Segmentation tool group. The AI Deep Learning Prediction panel opens.


Click Load. The Open Model dialog opens.

Use the Category drop-down to show only Favorites, Image-Pro AI Models (default models), or My Trained Models (models that you have created and trained). You can also filter models by Architecture or Industry. Select a model and click Open. The model opens in the AI Deep Learning Prediction panel.
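
The relationship between architectures, models and the Open Model filters can be pictured as a simple catalog that is filtered by category, architecture and industry. The sketch below is hypothetical: the `Model` record, the `filter_models` function, the model names and the industry labels are invented for illustration and are not part of Image-Pro.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    """Hypothetical record describing one model, i.e. one instance of an architecture."""
    name: str
    architecture: str      # e.g. "Cellpose", "StarDist", "UNET"
    category: str          # "Image-Pro AI Models" or "My Trained Models"
    industry: str          # hypothetical industry label
    favorite: bool = False
    trained: bool = True   # pre-trained models are ready to use; untrained ones need training first


def filter_models(models, category=None, architecture=None, industry=None):
    """Keep only the models that match every filter that is set (None means 'any')."""
    result = []
    for m in models:
        if category == "Favorites" and not m.favorite:
            continue
        if category not in (None, "Favorites") and m.category != category:
            continue
        if architecture is not None and m.architecture != architecture:
            continue
        if industry is not None and m.industry != industry:
            continue
        result.append(m)
    return result


# Hypothetical catalog entries, for illustration only.
CATALOG = [
    Model("nuclei-demo", "StarDist", "Image-Pro AI Models", "Life Sciences", favorite=True),
    Model("cells-demo", "Cellpose", "Image-Pro AI Models", "Life Sciences"),
    Model("my-grains", "UNET", "My Trained Models", "Materials Science"),
    Model("untrained-unet", "UNET", "Image-Pro AI Models", "Materials Science", trained=False),
]

print([m.name for m in filter_models(CATALOG, category="Favorites")])
print([m.name for m in filter_models(CATALOG, architecture="UNET")])
```

The first call lists only `nuclei-demo`; the second lists both UNET entries, including the untrained one, which would still need training before it could produce useful predictions.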


Click Predict Preview to find all objects in the active image without applying either Ranges or Split functions.


Click Count to find objects in the images with Edit Measurement Range and Split applied.
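
The difference between Predict Preview and Count can be pictured as a post-processing step: the preview keeps every predicted object, while Count additionally filters the objects by measurement ranges. This minimal sketch assumes a single area range and uses hypothetical names (`DetectedObject`, `apply_measurement_range`); it does not model the Split function or Image-Pro's actual measurement options.

```python
from dataclasses import dataclass


@dataclass
class DetectedObject:
    """Hypothetical record for one predicted object."""
    label: int
    area: float  # e.g. in pixels or calibrated units


def apply_measurement_range(objects, min_area, max_area):
    """Keep objects whose area lies inside [min_area, max_area], inclusive."""
    return [o for o in objects if min_area <= o.area <= max_area]


# Hypothetical predicted objects, as a preview would report them (no ranges applied).
preview = [
    DetectedObject(1, 12.0),
    DetectedObject(2, 150.0),
    DetectedObject(3, 480.0),
    DetectedObject(4, 5200.0),
]

counted = apply_measurement_range(preview, min_area=50.0, max_area=1000.0)
print(len(preview), len(counted))  # 4 2
print([o.label for o in counted])  # [2, 3]
```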

For further details of working with models of each architecture supported in Image-Pro, follow the links below.

Finding Objects with Cellpose Models

Finding Objects with Stardist Models

Image Segmentation using UNET Models

Computer Settings and Deep Learning

GPU or CPU

For deep learning prediction and training, we recommend using a GPU that meets the deep learning system requirements. If you do not have access to a computer with a recommended GPU, set the deep learning options to run on the CPU.

Note: Cellpose flows are computed slightly differently on the CPU and the GPU, so expect subtly different results when switching between these hardware options. For details, see the Cellpose GitHub repository.
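
One general reason why the same calculation can give slightly different results on different hardware is that floating-point addition is not associative: a GPU that sums values in parallel, in a different order from a CPU, can arrive at a slightly different total. The short Python example below illustrates this general effect only; it is not a description of how Cellpose computes its flows.

```python
import math

values = [0.1, 0.2, 0.3]

# Same three numbers, added in two different orders.
left_to_right = (values[0] + values[1]) + values[2]
right_to_left = values[0] + (values[1] + values[2])

print(left_to_right)                               # 0.6000000000000001
print(right_to_left)                               # 0.6
print(left_to_right == right_to_left)              # False
print(math.isclose(left_to_right, right_to_left))  # True
```

The two results differ only in the last digits, which is why such differences are usually compared with a tolerance rather than exact equality.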

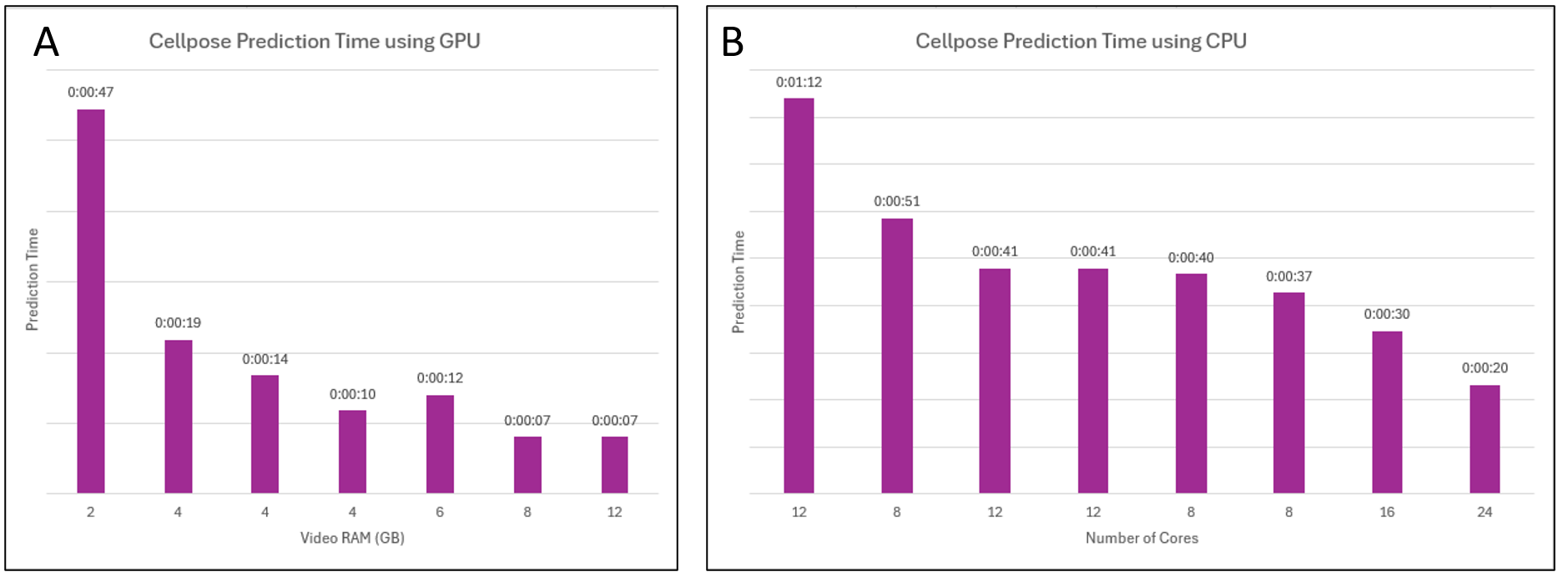

Deep learning prediction using Cellpose models is greatly accelerated on a supported NVIDIA GPU, as shown in bar charts 1A and 1B.

Bar Charts 1A and 1B showing the time to predict objects in a 1104 x 1104 image using a Cellpose model on a selection of computers. A) Prediction times using GPU. The mean prediction time is 17 seconds. Shorter prediction times are seen with GPUs with more video RAM. B) Prediction times when using CPU. The mean prediction time is 42 seconds.

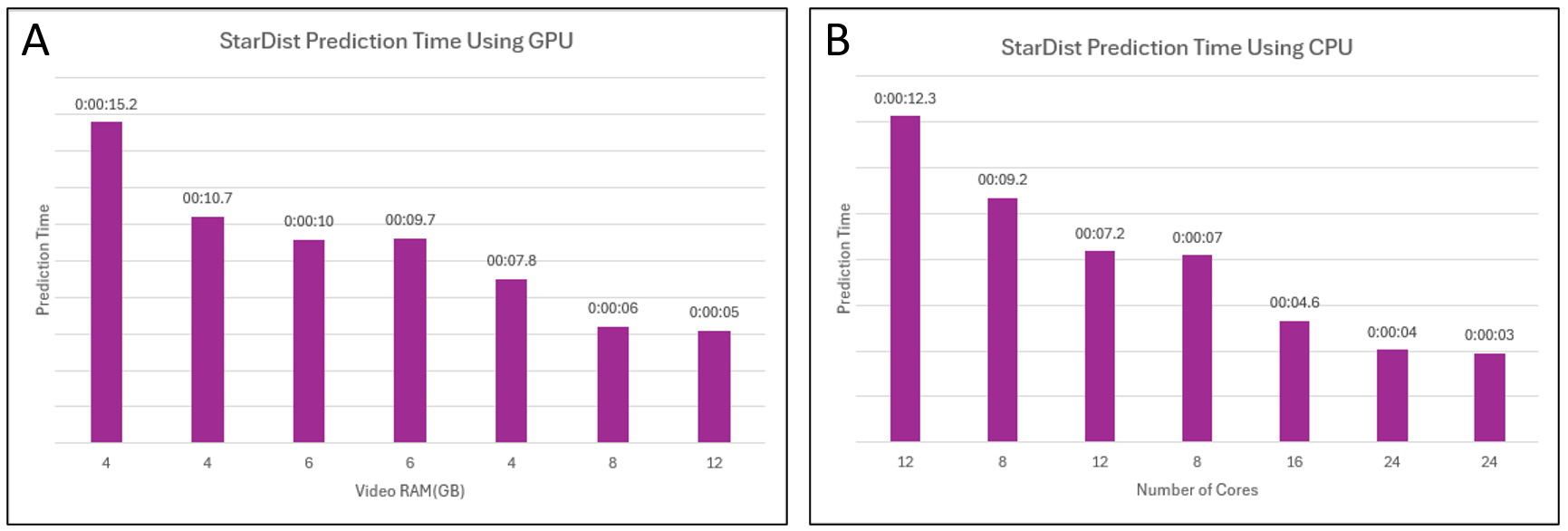

For StarDist and UNET models, prediction times are similar on the GPU and the CPU, as shown for StarDist in bar charts 2A and 2B.

Bar Charts 2A and 2B showing the time to predict objects in a 1104 x 1104 image using a StarDist model on a selection of computers. A) Prediction times using GPU. The mean prediction time is 9 seconds. B) Prediction times when using CPU. The mean prediction time is 7 seconds.

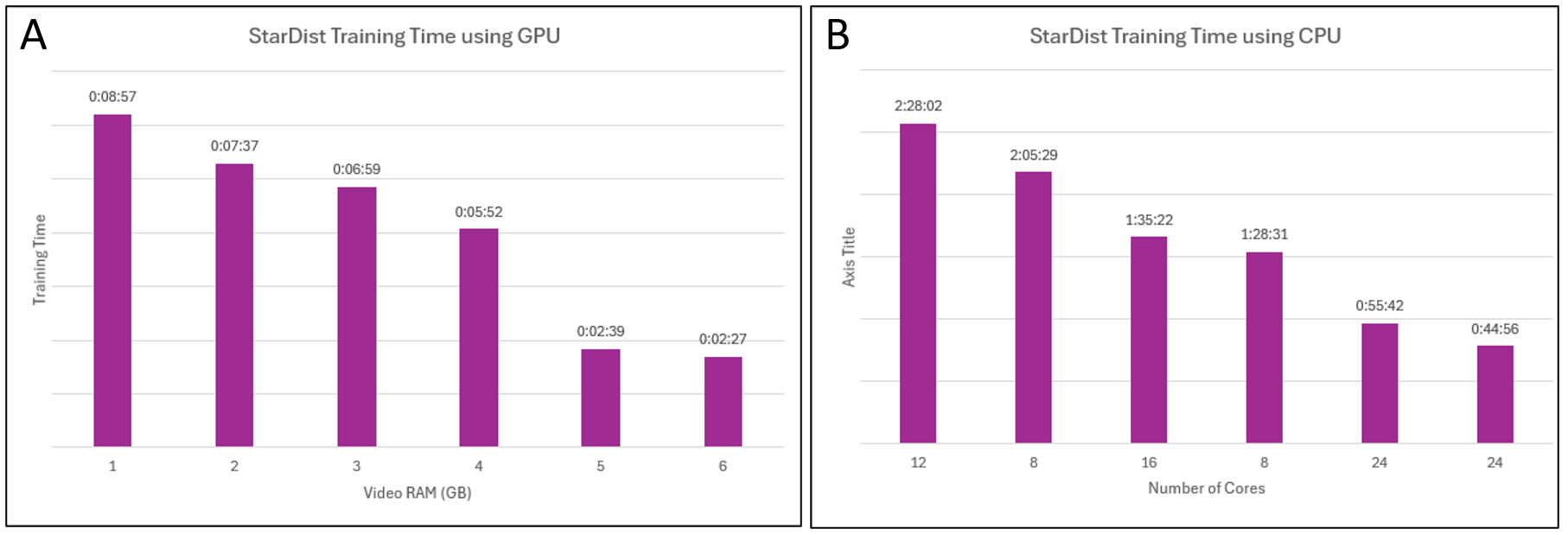

For all architectures, training is greatly accelerated on a supported NVIDIA GPU, as shown for StarDist in bar charts 3A and 3B.

Bar Charts 3A and 3B showing the time to train a StarDist model on a selection of computers. A) Training times using GPU. The mean training time is 5 minutes and 45 seconds. B) Training times when using CPU. The mean training time is 1 hour and 33 minutes.
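
The speedups implied by the mean times in the captions of bar charts 1A to 3B can be worked out directly. This is a rough sketch: each mean is taken across different computers, so a ratio of means only summarizes the overall trend rather than the speedup on any one machine.

```python
# Mean times from the captions of bar charts 1A-3B, converted to seconds.
MEAN_TIMES = {
    "Cellpose prediction": {"gpu": 17, "cpu": 42},
    "StarDist prediction": {"gpu": 9, "cpu": 7},
    "StarDist training": {"gpu": 5 * 60 + 45, "cpu": 1 * 3600 + 33 * 60},
}


def speedup(times):
    """How many times faster the GPU is than the CPU (below 1 means the CPU was faster)."""
    return times["cpu"] / times["gpu"]


for task, times in MEAN_TIMES.items():
    print(f"{task}: {speedup(times):.1f}x")
```

It prints about 2.5x for Cellpose prediction, 0.8x for StarDist prediction (the CPU was slightly faster on average) and 16.2x for StarDist training.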

Thermal Management

The speed of deep learning prediction and training can vary greatly depending upon your computer's settings.

For example, the Thermal Management settings in the Dell Power Management app can make steps that normally take minutes take hours instead.

The table below shows a benchmark test of training a Cellpose model from scratch with 4379 labels for 10 epochs on a laptop equipped with an NVIDIA GeForce RTX 2060 GPU with 6 GB of dedicated video memory.

| Thermal Management Setting | Training Time (10 epochs) |
|---|---|
| Cool | 62 minutes 20 seconds |
| Quiet | 9 minutes 51 seconds |
| Optimized | 9 minutes 12 seconds |
| Ultra Performance | 9 minutes 19 seconds |

Note that training takes far longer with the Cool setting: over an hour, compared with under 10 minutes for the other settings. If training were extended to the more usual 500 epochs, it could take days to complete at this setting (roughly 52 hours, assuming training time scales linearly with the number of epochs). We strongly recommend that you check your computer's documentation to ensure that no settings that throttle performance are applied during training.
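
The effect of the Cool setting can be quantified from the table. The sketch below assumes that training time scales linearly with the number of epochs, which is an approximation; actual times may differ.

```python
# Training times for 10 epochs from the thermal management table, in seconds.
TEN_EPOCH_TIMES = {
    "Cool": 62 * 60 + 20,
    "Quiet": 9 * 60 + 51,
    "Optimized": 9 * 60 + 12,
    "Ultra Performance": 9 * 60 + 19,
}


def extrapolate_hours(seconds_per_10_epochs, epochs):
    """Estimate total training time in hours, ASSUMING time grows linearly with epochs."""
    return seconds_per_10_epochs / 10 * epochs / 3600


fastest = min(TEN_EPOCH_TIMES.values())
for setting, seconds in TEN_EPOCH_TIMES.items():
    ratio = seconds / fastest
    hours_500 = extrapolate_hours(seconds, 500)
    print(f"{setting}: {ratio:.1f}x the fastest time, ~{hours_500:.1f} h for 500 epochs")
```

Under that assumption, the Cool setting is about 6.8 times slower than the fastest setting (Optimized) and works out to roughly 52 hours for 500 epochs, compared with under 8 hours for Optimized.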

Learn more about all features in Count/Size